Azure SQL VM Best Practices: Storage

- Rohit Kumar

- Aug 2, 2021

- 7 min read

STORAGE:

Microsoft Azure offers seven types of storage options: Block Blobs, Azure Data Lake Storage, Managed Disks, Files, Queues, Page Blobs. In general, we can use them for tasks as shown in the below screenshot:

Also, Disks can be classified as Managed(New and More feature-rich) and Unmanaged (older/Legacy type) disks; for our Azure SQL VM scenario here, we will emphasize Managed Disks.

First Thing First, currently, there are four types of managed storage disk options available in Azure.

1. Standard HDD (S4 - S80)

2. Standard SSD (E1 - E80)

3. Premium SSD (P1 – P80)

4. Ultra Disks

When evaluating the disks for our workloads, the primary consideration should be Latency, IOPS, and Bandwidth. The following table provides the insight comparison on these storage options:

Image reference: https://docs.microsoft.com/en-us/azure/virtual-machines/disks-types

As shown in the above screenshot Standard HDD and Standard SSD are more suited for Dev/Test Environment, Backups, or workloads that are less sensitive to performance, simply because they do not have enough IOPS or Throughput. They are not ideal for performance-sensitive workloads. Also, performance may vary depending on other factors.

To learn more on managed disks, check out Microsoft Storage documentation. (https://docs.microsoft.com/en-us/azure/virtual-machines/disks-types#premium-ssd)

So, we will start with Premium Options.

Premium SSD:

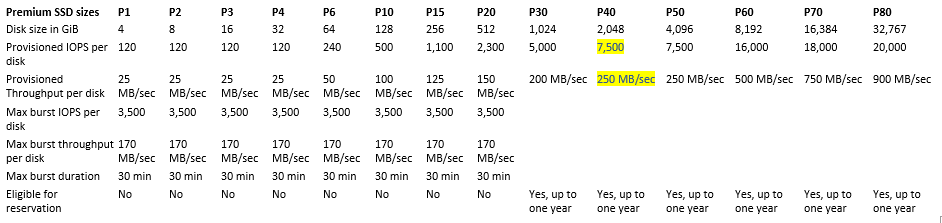

Azure premium SSDs provide high-performance and low-latency disk support for virtual machines (VMs) with input/output (IO)-intensive workloads. Premium SSDs fit for mission-critical production applications. Premium SSD disks are designed to give low single-digit millisecond latencies and target IOPS and throughput specified in the table below for 99.9% of the time. Premium SSDs can only be used with VM series that are premium storage compatible.

Image reference: https://docs.microsoft.com/en-us/azure/virtual-machines/disks-types#premium-ssd

When you provision a premium storage disk, unlike standard storage, you are guaranteed the capacity, IOPS, and throughput of that disk. For example, if you create a P40 disk, Azure provisions 2,048-GB storage capacity, 7,500 IOPS, and 250-MB/s throughput for that disk. Your Application can use all or part of the space and performance. Also, Premium disks have an option of disk reservation starting P30.

What are Azure disk reservations? Disk reservation is the option to buy one year of disk storage in advance, decreasing your total cost. Azure disk reservation provides the opportunity to purchase Premium SSDs in the specified SKUs from P30 (1 TiB) up to P80 (32 TiB) for a one-year term. There is no restriction on the least amount of disks required to purchase a disk reservation. Additionally, you can choose to pay with a single, upfront payment or monthly payment. To learn more on disk reservation, follow Microsoft documentation.

Ultra Disks:

Azure ultra disks produce high throughput, high IOPS, and consistent low latency disk storage for Azure VMs. Generally speaking, these are ideal for the applications that have to deal with very high data traffic, such as Banking Scenarios, Retail Stores, or a hyper sport like Formula 1 (most probably). The IOPS for these can scale from 1,200 to 160,000 at 300 IOPS/GiB. These are best suited for organizations/scenarios that want the best of performance at all times. Also, using Ultra disk is the ability to change the configuration on the fly without restarting the VM. At any point in time when needed, the configuration can be dialed higher or lower depending on the requirement, and this can be done on three parameters (Capacity, IOPS, or Bandwidth). Ultra disks are suited for data-intensive workloads such as SAP HANA, top-tier databases, and transaction-heavy workloads.

Image reference: https://docs.microsoft.com/en-us/azure/virtual-machines/disks-types#premium-ssd

There are also some drawbacks associated with Ultra Disks.

These are only supported in certain regions, so Not every VM size is available in every supported area with ultra disks.

Caching is not supported for reads and writes.

They are only available as data disks.

It can only be created as empty disks.

It doesn't currently support disk snapshots, disk export, changing disk type, VM images, availability sets, Azure Dedicated Hosts, or Azure disk encryption.

It doesn't support integration with Azure Backup or Azure Site Recovery.

Support 4k physical sector size by default. 512E sector size is available as a public offering (no sign-up required). Most applications are compatible with 4k sector sizes, but some require 512-byte sector sizes. One example would be Oracle Database, which requires release 12.2 or later to support the 4k native disks. For older versions of Oracle DB, 512-byte sector size is needed.

To learn more on Ultra disks, you can refer to Microsoft documentation.

Now let us get back to the Storage configuration of Azure SQL VM; storage is crucial for Azure SQL VM performance, so create different storage pools for Data files, Logs, and temp DB.

Best Practices includes

-Temp DB on the local or ephemeral drive (Cached and Temp Storage).

-Data files should be premium storage (P30 – P40 recommended) and should have caching enabled.

-Log storage should be fast, and caching should be disabled.

With the suitable Disks opted for our production workloads, we must choose the right VM to make optimal use of the underlying storage and avoid any bottleneck because VM's have their own Storage I/O limits. When we get this selection incorrect, either we face VM I/O capped or Disk I/O capped situations. Remember, in any case of over and under-provisioning of resources, we will lose on performance.

To avoid this-

Before starting anything, get the baseline metrics for the required VM. IOPS, Throughput, Latency, Memory, CPU can be measured using Azure Monitor or Perfmon tool.

Also important is to start with storage and move to VM as resizing storage is much more complex than resizing VM.

VM IO Capping:

Image reference: https://docs.microsoft.com/en-us/azure/virtual-machines/dv3-dsv3-series

In this, Bandwidth that the Application requires is restricted at the VM level.

The solution here is to Enable Host Caching(Bringing storage closer to VM), Upgrade VM or VM Level Bursting if the load is for short periods.

DISK IO Capping:

In this, Bandwidth that the Application requires is restricted at the Disk level.

The solution is to add more data disks, Enabling Caching or Disk Level Bursting if the load is for short periods.

Let's discuss Caching and Bursting a bit:

Caching:

Caching is a technique that improves the performance and scalability of a system. It does this by temporarily copying frequently accessed data to fast storage close to the Application.

Here we have different scenarios of reading/Write caching. Let's discuss them in detail-

Read-only caching with data not available in cache- Here, since information is not available, it will be fetched from disk and sent to the Application. The performance will be the same as no caching.

Read-only caching with data available in cache- Since data is available in the cache, it will be directly sent as output—no need to access the disk for its fast.

Read-only caching with write operation- In here, data will be written to cache and disk.

Read/Write caching (Not recommended)- Since read throughput can take advantage of caching but write throughput read is the same as reading caching, but when write happens, it is written to the cache, and then as a background process, it is written to disk as a lazy process. So, this can lead to data loss if things go wrong due to any reason.

As in Azure VM, we plan to use distributed disk structure; we should follow different caching techniques on different types of disks:

– use read caching for the data drives/storage pools

– use no caching for the log drives/storage pools

– use read caching for the temp DB drives/storage pools

Read throughput can take advantage of caching but write throughput cannot, so we plan accordingly.

Image reference: https://docs.microsoft.com/en-us/azure/virtual-machines/dv3-dsv3-series

Example: As shown in the above table, we use Standard_D48s_v3 machine in Uncached disk throughput environment it can handle 76800 IOPS and 1152 MBps of data, somehow if our data load increases and we start to lose on these counts, we will be stuck with Underperforming VM, in this case, we will have to upgrade our system. But if we use host caching, we can increase our machine bandwidth significantly to 96000 IOPS and 768 MBps.

Moreover, this service is free of cost, so we lose resources if we do not use caching.

To better understand which VM's support host caching, Please see Microsoft Documentation here.

Host Caching can be enabled just by enabling Read-Only caching in the drive options.

Note: Drive that is 4TB or Higher in Size does not support caching. If multiple disks are attached to your VM, each smaller than 4 TiB disks will support caching.

Also, Changing the cache setting of an Azure disk detaches and re-attaches the target disk. If it is the operating system disk, the VM is restarted.

Bursting: (Although not relevant in our SQL VM scenario but still worth reading for disk scenarios from P1 to P20).

Boosting disk storage or Virtual Machines IOPS and MB/s performance is referred to as Bursting. It allows resources to go above their provisioned limit for 30 minutes per day. You can effectively leverage VM and disk bursting to achieve better performance on both your VMs and disk.

Azure premium SSDs offer two models of Bursting:

On-demand bursting model.

Credit-based model

Image reference: https://docs.microsoft.com/en-us/azure/virtual-machines/disk-bursting#disk-level-bursting

At the disk level, Bursting can be achieved from P1 to P20. This is enabled by default.

Image reference: https://docs.microsoft.com/en-us/azure/virtual-machines/disks-types#premium-ssd

At Machine Level, Bursting only uses the credit-based model; it is enabled by default for all VMs that support it.

https://docs.microsoft.com/en-us/azure/virtual-machines/lsv2-series

To learn more on Bursting, refer to Microsoft Documentation here. It is essential to know which level the performance is hampered (VM Capped or Disk Capped). For this, we can benefit from IO Disk Utilization Matrix or VM Utilization Matrix.

These are the optimization features available in Azure and can be used with disks or VM.

To learn More on Utilization Matrix, check out Microsoft documentation.

SUMMARY & BEST PRACTICES

As a rule of thumb, start with storage and then move towards VM.

Get the storage configuration optimization done. Example - Enable caching, Disk striping, Enable Write Accelerator, Use Resource Manager templates from Workload optimization settings as shown below.

Avoid using OS or temporary disks for database storage or logging.

Enable read caching on the disk hosting the data files and TempDB.

Do not enable caching on the disk hosting the log file.

Use the new generation machines and disks.

Keep the storage account and SQL Server VM in the same region.

Do not miss the SQL I/O best practices, same as a dba performs and Apply SQL Server performance fixes.

Stripe multiple Azure data disks to get increased IO throughput.

I/O Enable database page compression.

Enable instant file initialization for data files.

Limit or disable auto growth on the database.

Disable auto shrink on the database.

Move all databases to data disks, including system databases.

Move SQL Server error log and trace file directories to data disks.

Enable locked pages as locking memory pages may boost performance when paging memory to disk is expected.

CONCLUSION:

Microsoft offers some of the above-mentioned services (Utilization Matrix) for free and some of the above-mentioned practices as default, so while setting up an Azure SQL VM, it is very much important to go through the Microsoft documentation thoroughly and know the base requirements for the VM also remember resource group can be altered anytime but testing the VM for production workloads before deployment saves us on resource, time and cost involved as too much over or under-provisioning of resources will only lead to losses.

Also, as a recommendation, it is suggested to slightly under provision a resource and then

based on our experience, increase the underlying resource.

Author: Rohit Kumar

Microsoft Certified Azure Data Engineer Associate

Comments